Artificial Intelligence.

A New Dawn

There is an atmosphere of fear surrounding artificial intelligence. Public discourse is saturated with claims that this technology will soon surpass human intelligence and render civilisation unrecognisable within a decade. These narratives are not emerging in a vacuum; they are shaped by economics, power, and perception.

In the United States, home to many of the world’s wealthiest individuals and most powerful corporations, AI development is backed by extraordinary levels of private capital. Hundreds of billions of dollars are being invested in models, data centres, and energy infrastructure. Inevitably, this raises concern about concentration of influence. AI development is not merely technical; it is political. Cynically, it can be viewed as a mechanism through which existing power structures preserve relevance, profitability, and leverage, while simultaneously shaping policy through economic dependency and national competitiveness.

This does not negate the genuine advancements or long-term potential of AI and robotics. There are clear benefits for human progress. However, several foundational principles must remain in view.

AI is a human creation. It did not arrive fully formed from “off-planet”, to use a NASA term. It is expensive to build and operate, and at present only a narrow group of organisations possess the capital, data access, and infrastructure required to advance it at scale. The underlying economics are familiar. Why invest?

Firstly, to generate profit.

Secondly, to protect influence and a position of power.

Thirdly, and potentially, to improve human life and society.

These drivers are not equal in practice. When investments fail to generate returns, and losses accumulate, even the wealthiest investors withdraw. Capital is reallocated. This pattern has repeated across every major technological cycle. AI is not exempt.

Fear plays a functional role here. Governments are warned they will “fall behind.” Populations are told control will be lost. These narratives accelerate funding, loosen regulation, and secure long-term investment. Technology companies require sustained capital, and urgency is an effective lever.

Against this backdrop, it is essential to remember that AI is a tool. It is the latest phase in a vast technological continuum. Technology advances; it does not reverse. The difference today is the degree of dependency modern civilisation has developed on its tools. AI represents a significant leap in that dependency.

The rhetoric is often extreme: mass unemployment, autonomous warfare, algorithmic control of global finance, human redundancy, eventual surrender of planetary governance to super-intelligent systems. Some even suggest such an outcome might outperform current human leadership, given ongoing war, environmental neglect, unresolved poverty, and political self-interest masquerading as democracy.

AI and user interface.

Engagement with AI, in practice, is far more mundane. Most users interact with free language-based platforms in the same way they once used search engines. These systems generate responses by synthesising vast quantities of existing human-produced material: published texts, archived data, and prior interactions. These platforms do not think independently. They operate within constraints set by programmed architecture, training, and design choices made by their creators.

A critical risk for uninitiated users is epistemic transfer: mistaking generated output for truth. AI systems are designed to respond, not to withhold. When information is incomplete, they synthesise plausible continuations. This behaviour, commonly termed a “hallucination,” is not intentional deception but probabilistic output without grounding. The presence of disclaimers acknowledges this limitation.

Within professional practice, disciplined use becomes essential. AI should be treated no differently than other tools: calculators, modelling software, or reference libraries. The user sets direction. Purpose must be established in advance. Without it, the tool determines trajectory.

Clarity of prompting matters. Constraints matter more. Unbounded interaction produces drift. Prompting culture itself has become commodified, with techniques packaged and sold. This is not inherently problematic, but it is transactional. The quality of output reflects the quality of input, but judgement must remain external to the system. Human judgement required.

Critical evaluation is non-negotiable. Generated responses must be examined for alignment, context fidelity, and subtle redirection. Convenience features and task escalation can easily replace original intent if left unchecked. Human judgement required, again.

There are also material considerations. Interactions are typically recorded. Inputs can become part of the system’s data exhaust. This raises legitimate concerns around privacy, security, intellectual property, and creative ownership. In professional and creative environments, this is not abstract. Ideas externalised into proprietary platforms cannot be fully retrieved or contained.

A New Dawn

Against the accelerating digital landscape, a counter-movement is emerging. Not a rejection of technology, but a rebalancing. 2026 is increasingly framed as a return to analogue values. This is not nostalgia. It is a recalibration.

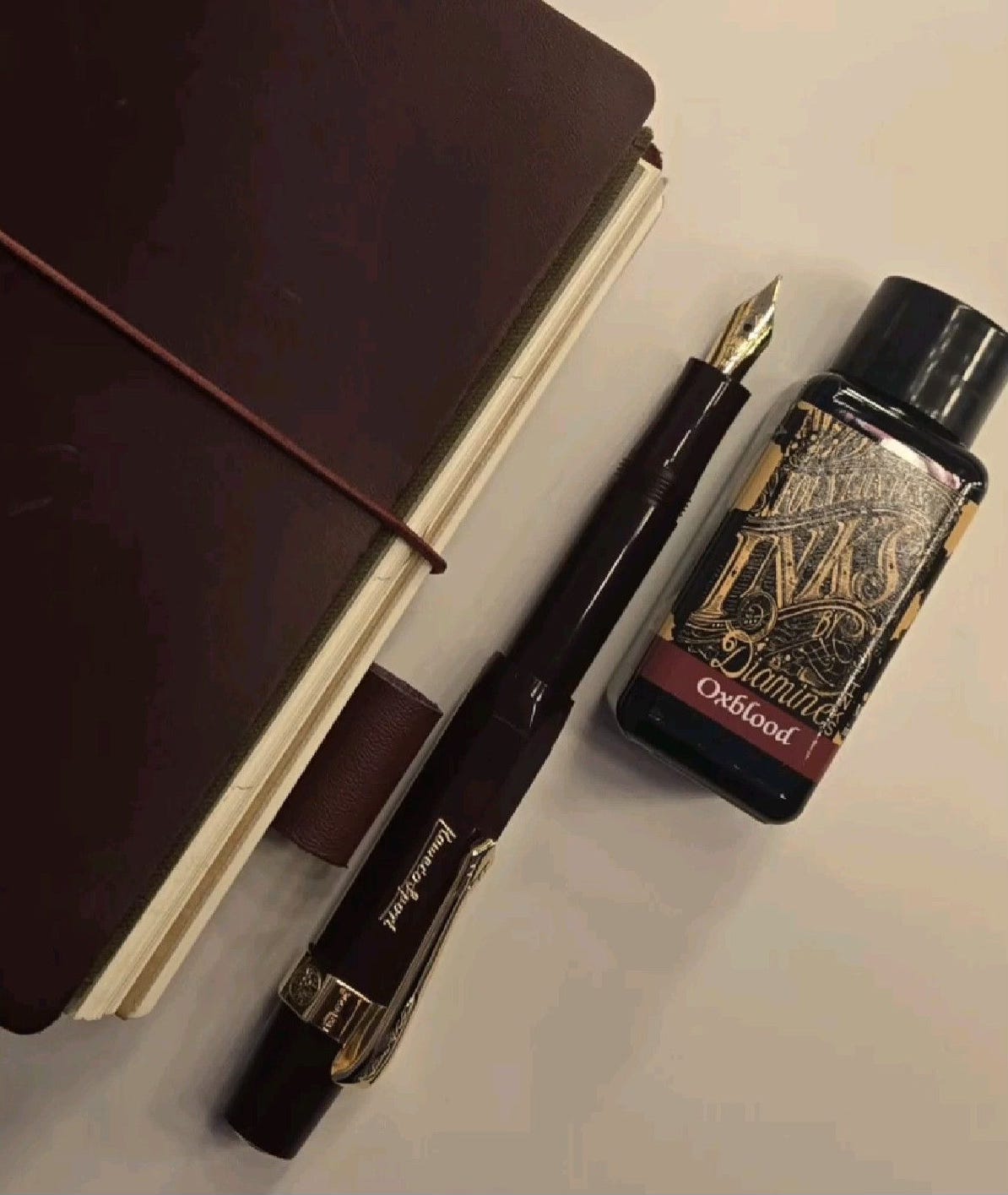

Analogue engagement introduces friction, embodiment, and imperfection. Buying a physical book, browsing shelves, reading with attention. Writing by hand, confronting your own illegible handwriting, correcting spelling, expanding your vocabulary, adjusting rhythm.

Using wired headphones, mechanical cameras, acoustic instruments. Instruments that require tuning, coordination, and patience. Objects shaped by human hands from natural materials. These experiences are cognitive. They demand presence. They reinforce continuity between thought, body, and environment.

Such practices are not inefficient accidents; they are conditions under which meaning forms. They strengthen awareness and resist the passive consumption loops of digital environments.

Culturally and commercially, this shift is visible. Lifestyle brands increasingly emphasise craft, material honesty, and limited production. These are not mass-market propositions; they derive value from difference, restraint, and specificity.

AI remains a powerful computational system. Its capacity exceeds the human brain in speed, scale, and pattern extraction. Whether this constitutes intelligence is an open question. Self-directed learning may alter that debate. Yet another dimension remains largely absent from technological discourse: Wisdom.

Wisdom is not optimisation. It is an ancient human companion. It is restraint under capacity: the ability to act less than one could, despite having the means to act more.

The question, then, is not whether AI will become more capable. The issue is whether humans retain the discipline, judgement, and restraint required to remain its master.

This insight was written on 25 January 2026, Robert Burns Night, 267 years after the birth of a poet whose work continues to resonate through human connection to land, labour, and shared dignity. His poetry emerged from lived experience, perception, and vulnerability.

AI can process language. It cannot plough a field, encounter a frightened mouse, and transform that moment into words with enduring human meaning.


Hi Rachel. Thank you for your thoughtful comments on this Insight. I am delighted you enjoyed reading it and felt hopeful about our collective human recalibration with AI.

This was a wonderful read that left me feeling hopeful about the way we can interact with AI without losing our innate ability to create, feel and communicate!